Overview

Webflow provides built-in tools to manage your robots.txt file and control which bots can access your website. This guide explains how to configure bot access for your Webflow site.

Accessing Webflow SEO Settings

1. Open Your Project

Log into your Webflow account and open the project where you want to configure bot access.

2. Navigate to Site Settings

Click on the project settings icon in the left sidebar, or access it through the top menu. Select the site you want to configure if you have multiple sites in your project.

3. Go to SEO Tab

In the site settings panel, click on the "SEO" tab. This section contains all SEO-related configurations including robots.txt management.

Configuring robots.txt in Webflow

Enable Auto-Generated robots.txt

Webflow automatically generates a robots.txt file for published sites. You can customize it by adding custom rules.

1. Find the robots.txt Section

Scroll down in the SEO settings until you find the "Auto-generate sitemap" and "robots.txt" options.

2. Add Custom robots.txt Rules

Click on "Add custom robots.txt rules" or "Edit robots.txt" depending on your Webflow plan. This opens a text editor where you can add your custom directives.

3. Configure User Agent Permissions

Add rules to allow specific bots to crawl your site:

User-agent: ChatGPT-User
Allow: /

User-agent: GPTBot
Allow: /

User-agent: ClaudeBot
Allow: /

4. Keep Default Rules

Webflow includes some default rules for common directories. You can keep these or modify them as needed:

User-agent: *
Disallow: /admin
Disallow: /checkout

Example: Allowing ChatRankBot

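To allow ChatRankBot to crawl your Webflow site, add the following to your robots.txt configuration. Use only the product token (ChatRankBot) on the User-agent line, not the full user agent string the bot sends in its requests (ChatRankBot/1.0 (+https://chatrank.ai/bot)), because robots.txt parsers match on the token:

User-agent: ChatRankBot
Allow: /

Complete Example Configuration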

Here's a full robots.txt example with multiple bots:

User-agent: ChatRankBot
Allow: /

User-agent: ChatGPT-User
Allow: /

User-agent: GPTBot
Allow: /

User-agent: ClaudeBot
Allow: /

User-agent: Googlebot
Allow: /

User-agent: *
Disallow: /admin
Disallow: /checkout

Publishing Your Changes

After configuring your robots.txt rules:

1. Click "Save" in the settings panel.

2. Publish your site for the changes to take effect.

Changes are live immediately after publishing.

Avoiding Rate Limiting During Scraping

Webflow's hosting handles ordinary crawler traffic well, but asking bots to pace their requests can reduce load spikes and bandwidth use.

Configure Crawl-delay

Add a Crawl-delay directive to specify how many seconds bots should wait between requests. Crawl-delay is not part of the robots.txt standard, so support varies by crawler; Googlebot, for example, ignores it:

User-agent: ChatRankBot
Allow: /
Crawl-delay: 2

Webflow-Specific Rate Limiting Considerations

Webflow Hosting Tiers: Different plans have different capacities:

- Free/Basic sites: Use crawl-delay of 3-5 seconds

- CMS sites: Use crawl-delay of 2-3 seconds

- Business/Enterprise sites: Use crawl-delay of 1-2 seconds

CDN Benefits: Webflow uses a global CDN which handles traffic efficiently. This means your site can typically handle faster crawl rates than self-hosted solutions.
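The tier suggestions above can be turned into robots.txt groups with a small helper. This is a minimal Python sketch, not a Webflow feature: the plan keys ("basic", "cms", "business") and the function name robots_group are hypothetical labels chosen for illustration, and the delay ranges are copied from the list above.

```python
# Suggested Crawl-delay ranges in seconds, copied from the tier list above.
# The dictionary keys are hypothetical labels, not official Webflow plan IDs.
CRAWL_DELAY_RANGE = {
    "basic": (3, 5),     # Free/Basic sites
    "cms": (2, 3),       # CMS sites
    "business": (1, 2),  # Business/Enterprise sites
}


def robots_group(user_agent: str, plan: str, conservative: bool = True) -> str:
    """Build one robots.txt group that allows everything with a tier-based delay."""
    low, high = CRAWL_DELAY_RANGE[plan]
    delay = high if conservative else low  # conservative picks the longer wait
    return f"User-agent: {user_agent}\nAllow: /\nCrawl-delay: {delay}\n"


if __name__ == "__main__":
    print(robots_group("GPTBot", "cms"))
```

Choosing the upper end of each range by default errs toward lighter load; pass conservative=False to use the lower end.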

Set Different Delays for Different Bots

User-agent: ChatRankBot
Allow: /
Crawl-delay: 2

User-agent: GPTBot
Allow: /
Crawl-delay: 3

# Note: Googlebot ignores Crawl-delay
User-agent: Googlebot
Allow: /
Crawl-delay: 1

User-agent: *
Allow: /
Crawl-delay: 5

Monitor Crawl Activity

Use Webflow's analytics and site activity monitoring:

1. Go to Site Settings → Analytics.

2. Check for unusual traffic patterns.

3. Look for spikes in bot activity.

4. Adjust crawl-delay values if needed.

Consider Your CMS Collection Size

If you have a large CMS collection (hundreds or thousands of items):

- Set longer crawl-delays to prevent overwhelming your site

- Consider asking bot operators to crawl during off-peak hours (robots.txt itself cannot schedule crawls)

- Monitor form submissions and dynamic content generation

User-agent: ChatRankBot
Allow: /blog/
Allow: /products/
Crawl-delay: 3

Webflow Bandwidth Considerations

Webflow plans include bandwidth limits:

- Free plan: 1 GB

- Basic: 50 GB

- CMS: 200 GB

- Business: 400 GB

- Enterprise: Custom

Aggressive bot crawling can consume bandwidth. Monitor usage in Site Settings → Billing & Plans.
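To get a rough sense of how much of a plan's allowance crawling could use, the arithmetic can be sketched in Python. The plan limits are copied from the list above; the page count, average page size, and crawl frequency are assumptions you would replace with your own numbers, and the function names are hypothetical.

```python
# Monthly bandwidth allowances in GB, copied from the list above.
PLAN_BANDWIDTH_GB = {"free": 1, "basic": 50, "cms": 200, "business": 400}


def crawl_bandwidth_gb(pages: int, avg_page_mb: float, crawls_per_month: int) -> float:
    """Estimate GB transferred per month if a bot fetches every page on each crawl."""
    return pages * avg_page_mb * crawls_per_month / 1000  # 1 GB taken as 1000 MB


def share_of_plan(plan: str, used_gb: float) -> float:
    """Return the fraction of the plan's monthly allowance that used_gb represents."""
    return used_gb / PLAN_BANDWIDTH_GB[plan]


if __name__ == "__main__":
    used = crawl_bandwidth_gb(pages=2000, avg_page_mb=1.5, crawls_per_month=4)
    print(f"{used:.1f} GB, {share_of_plan('basic', used):.0%} of the Basic allowance")
```

With these assumed inputs (2,000 pages at 1.5 MB each, crawled four times a month), one bot would transfer about 12 GB, or roughly 24% of the 50 GB Basic allowance. Real usage is usually lower because crawlers rarely refetch every page on every visit.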

Contact Bot Operators

If a bot is consuming excessive bandwidth or resources, contact them through the URL in their user agent string (like https://chatrank.ai/bot for ChatRankBot) to request:

- Slower crawl rates

- Scheduled crawls during specific times

- Exclusion from certain high-bandwidth pages
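Many bots embed their contact URL in the user agent string after a "+" sign, as ChatRankBot does. Here is a minimal Python sketch for pulling that URL out of a user agent string you have on hand; the "+URL" convention is common but not universal, so the function (a hypothetical helper named contact_url) returns None when no such URL is present.

```python
import re
from typing import Optional

# Matches a URL introduced by "+", e.g. "(+https://chatrank.ai/bot)".
CONTACT_URL = re.compile(r"\+(https?://[^\s;)]+)")


def contact_url(user_agent: str) -> Optional[str]:
    """Return the contact URL embedded in a user agent string, if any."""
    match = CONTACT_URL.search(user_agent)
    return match.group(1) if match else None


if __name__ == "__main__":
    print(contact_url("ChatRankBot/1.0 (+https://chatrank.ai/bot)"))
```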

Advanced Configuration

Allowing Specific Pages

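To limit a bot to specific pages or sections, pair the Allow rules with a broader Disallow. On their own, Allow rules do not restrict anything, because crawling is permitted by default:

User-agent: ChatRankBot
Allow: /blog/
Allow: /articles/
Disallow: /
Crawl-delay: 2

User-agent: *
Disallow: /members-only/

Combining Allow and Disallow Rules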

You can create more complex rules that allow certain bots while restricting others:

User-agent: ChatRankBot
Allow: /
Crawl-delay: 2

User-agent: BadBot
Disallow: /

User-agent: *
Allow: /
Crawl-delay: 5
Disallow: /admin

Protecting Dynamic Content

If you have forms or dynamic content that generates server load:

User-agent: ChatRankBot
Allow: /
Disallow: /search
Disallow: /forms/submit
Crawl-delay: 2

Verifying Your robots.txt

Check that your robots.txt file is correctly configured:

1. Visit https://yourdomain.com/robots.txt in a browser.

2. Verify that your custom rules appear in the file.

3. Check that the syntax is correct (no typos or formatting errors).

4. Use the robots.txt report in Google Search Console for validation (it replaced the older robots.txt tester).
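As a complement to the manual checks, Python's standard urllib.robotparser module can report how a set of rules applies to a given bot and path. The sketch below parses sample rules inline; the rules and the helper name is_allowed are illustrative assumptions, so paste in your own robots.txt text. Note that Python's parser applies the first matching rule in a group, while the robots.txt standard (RFC 9309) uses the most specific match, so results can differ for groups that mix overlapping Allow and Disallow lines.

```python
from urllib.robotparser import RobotFileParser

# Sample rules for illustration only; paste the contents of your own robots.txt here.
SAMPLE_RULES = """\
User-agent: GPTBot
Allow: /

User-agent: BadBot
Disallow: /

User-agent: *
Disallow: /admin
Disallow: /checkout
"""


def is_allowed(rules: str, user_agent: str, path: str) -> bool:
    """Return True if user_agent may fetch path under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(user_agent, path)


if __name__ == "__main__":
    checks = [("GPTBot", "/blog/"), ("BadBot", "/blog/"), ("OtherBot", "/admin")]
    for agent, path in checks:
        print(agent, path, is_allowed(SAMPLE_RULES, agent, path))
```

To check the published file instead of pasted text, RobotFileParser also offers set_url() and read(), which fetch robots.txt from the live site.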

Webflow Plans and Limitations

Note that robots.txt customization may be limited on free Webflow plans. Ensure you have a paid site plan to access full robots.txt editing capabilities.

Sitemap Integration

Webflow automatically includes your sitemap location in robots.txt:

Sitemap: https://yourdomain.com/sitemap.xml

This helps bots discover all your pages more efficiently.
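To confirm that the Sitemap line is present and readable, the same standard-library parser can list the sitemap URLs it finds. This is a minimal sketch assuming Python 3.8 or later (where RobotFileParser.site_maps() is available); the sample text and the helper name sitemap_urls are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def sitemap_urls(rules: str) -> list:
    """Return the Sitemap URLs declared in robots.txt text (empty list if none)."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.site_maps() or []  # site_maps() returns None when no Sitemap line exists


if __name__ == "__main__":
    sample = "User-agent: *\nDisallow: /admin\n\nSitemap: https://yourdomain.com/sitemap.xml\n"
    print(sitemap_urls(sample))
```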

Complete Example with Rate Limiting

Here's a comprehensive robots.txt configuration optimized for Webflow:

# ChatRankBot with moderate crawl delay
User-agent: ChatRankBot
Allow: /
Crawl-delay: 2

# AI crawlers with slightly longer delay
User-agent: ChatGPT-User
Allow: /
Crawl-delay: 3

User-agent: GPTBot
Allow: /
Crawl-delay: 3

User-agent: ClaudeBot
Allow: /
Crawl-delay: 3

# Search engines with minimal delay (Webflow's CDN handles these well)
# Note: Googlebot ignores Crawl-delay
User-agent: Googlebot
Allow: /
Crawl-delay: 1

User-agent: Bingbot
Allow: /
Crawl-delay: 1

# Default for all other bots
User-agent: *
Allow: /
Crawl-delay: 5
Disallow: /admin
Disallow: /checkout
Disallow: /search

Sitemap: https://yourdomain.com/sitemap.xml

Best Practices for Webflow Sites

- Test changes on a staging domain before applying to production

- Keep your robots.txt rules simple and clear

- Document why you're allowing or blocking specific bots

- Review your robots.txt quarterly to ensure rules are still relevant

- Monitor bandwidth usage in Webflow analytics

- Use Webflow's built-in analytics to track bot behavior

- Set appropriate crawl-delay values based on your plan tier

Troubleshooting

If bots aren't respecting your robots.txt:

- Confirm the file is published and accessible

- Check for syntax errors in your rules

- Clear your Webflow site cache

- Wait 24-48 hours for bots to recrawl your robots.txt

- Check Webflow's status page for any hosting issues

- Review analytics for actual bot behavior vs. expected behavior

If bots are causing performance issues:

- Increase crawl-delay values across all user agents

- Contact aggressive bot operators via their provided URLs

- Consider temporarily blocking problematic bots

- Review your Webflow plan bandwidth limits

- Upgrade to a higher-tier plan if consistently hitting limits

- Use Webflow's support team for persistent bot issues

Additional Resources

For more information about bot behavior and crawl management, refer to the contact URLs provided in user agent strings. Most reputable bots (like ChatRankBot at https://chatrank.ai/bot) provide documentation and support channels for webmasters.

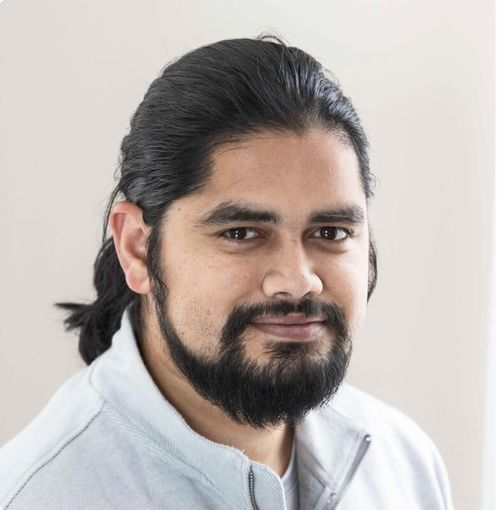

We’ve been using ChatRank for 34 days, and following their plan, we’ve actually grown over 30% in search visibility

ChatRank helped us go from zero visibility to ranking #2 in a core prompt for our business with only one new blog post!